General purpose data filters

There are three general purpose filters available in Movebank that Data Managers can use to identify and flag location records as outliers: the duplicate filter, the value range filter, and the speed filter.

See Section 10.5 for instructions to run the filters. If filters are run in the Event Editor, filters will be applied in the following order: (1) duplicate filter, (2) value range filter and (3) speed filter. From the Studies page, filters can be applied in any order. These general purpose data filters will only be applied to data that exists in the study at the time the filter is run. They will need to be re-applied to filter any new data added later through uploads or live feeds.

Duplicate filter

Duplicate records can end up in datasets for a variety of reasons, and can cause problems with data analysis. The duplicate filter allows you to flag records with the same tag ID and timestamp as other records in the data. You can optionally require values in additional attributes to match in order to be considered a duplicate.

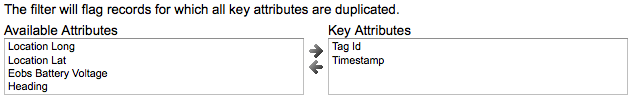

After initiating the filter, the filter key attribute options look like this:

To require the filter to look for matches in additional attributes, select each attribute under Available attributes and add it to the list of Key attributes.

When you run the filter, it will go through records in order of event ID (based on the order in which they were imported, and the same order in which you see them in the Event Editor). By default, the first record of each set of duplicates will be retained, and any subsequent records for which the key attributes are duplicated will be flagged.
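The default behavior described above can be illustrated with a minimal sketch. This is not Movebank's implementation; the record layout (dictionaries with `event_id`, `tag_id`, `timestamp`, and `lat` keys) and the function name `flag_duplicates` are hypothetical and chosen only for illustration.

```python
def flag_duplicates(records, key_attributes=("tag_id", "timestamp")):
    """Return the event IDs that would be flagged as duplicates.

    Records are visited in event-ID order. The first record of each set of
    duplicates is retained; later records with the same key are flagged.
    """
    seen = set()
    flagged = set()
    for rec in sorted(records, key=lambda r: r["event_id"]):
        key = tuple(rec.get(attr) for attr in key_attributes)
        if key in seen:
            flagged.add(rec["event_id"])
        else:
            seen.add(key)
    return flagged


# Hypothetical records: events 1 and 2 share a tag ID and timestamp.
records = [
    {"event_id": 1, "tag_id": "A", "timestamp": "2020-01-01 00:00:00", "lat": 10.0},
    {"event_id": 2, "tag_id": "A", "timestamp": "2020-01-01 00:00:00", "lat": 10.5},
    {"event_id": 3, "tag_id": "A", "timestamp": "2020-01-01 01:00:00", "lat": 10.0},
]
print(flag_duplicates(records))                                  # {2}
print(flag_duplicates(records, ("tag_id", "timestamp", "lat")))  # set()
```

Adding `lat` as a key attribute means events 1 and 2 no longer count as duplicates, because their latitudes differ.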

Setting advanced preferences: To further control which duplicates are flagged, you can designate a preferred value for any attribute when choosing which of the duplicate records to retain. This can be helpful if records with the same key attributes vary in quality or completeness. For example, you could preferentially retain duplicate records where transmission-protocol = GPRS or gps-activity is_not_null. This feature is currently only available when running filters from the Studies page.

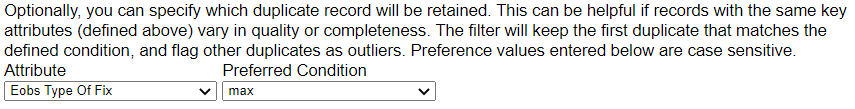

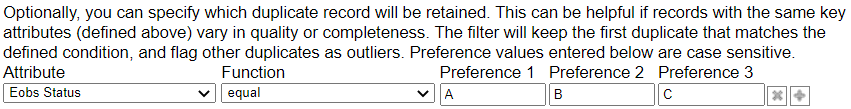

To set a preferred condition, select the desired attribute from the Attribute dropdown menu, then select an option from the second dropdown menu.

For numeric attributes, the second dropdown menu will be labeled Preferred Condition and will contain the options max, min, is_null, and is_not_null.

For character string attributes, the second dropdown menu will be labeled Function and will contain the options equal, contains, is_null, and is_not_null. When you have selected a function, you will need to enter a full (if function = equal) or partial (if function = contains) string into the Preference box for records you wish to keep. You can click + to designate up to five preferences. Multiple preferences will be prioritized from first to last.
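One way to picture how prioritized preferences could select the record to keep is sketched below. The exact tie-breaking rules are not documented here, so this sketch makes two assumptions: a preference that none of the remaining candidates satisfies is skipped, and any remaining tie is resolved by keeping the lowest event ID. The function name `choose_retained` and the record layout are hypothetical.

```python
def choose_retained(duplicates, preferences):
    """Pick the record to keep from one set of duplicate records.

    `preferences` is an ordered list of (attribute, function, value) tuples,
    applied from first to last. Assumption: a preference that no remaining
    candidate satisfies is skipped; ties fall back to the lowest event ID.
    """
    candidates = list(duplicates)
    for attr, func, value in preferences:
        present = [r for r in candidates if r.get(attr) is not None]
        if func == "is_null":
            matched = [r for r in candidates if r.get(attr) is None]
        elif func == "is_not_null":
            matched = present
        elif func == "equal":
            matched = [r for r in present if r[attr] == value]
        elif func == "contains":
            matched = [r for r in present if value in r[attr]]
        elif func in ("max", "min"):
            pick = max if func == "max" else min
            best = pick((r[attr] for r in present), default=None)
            matched = [r for r in present if r[attr] == best]
        else:
            raise ValueError(f"unknown function: {func}")
        if matched:
            candidates = matched
    return min(candidates, key=lambda r: r["event_id"])


# Hypothetical set of duplicates that differ in quality and completeness.
dups = [
    {"event_id": 10, "transmission-protocol": "SMS", "gps-activity": None},
    {"event_id": 11, "transmission-protocol": "GPRS", "gps-activity": None},
    {"event_id": 12, "transmission-protocol": "GPRS", "gps-activity": 3},
]
prefs = [
    ("transmission-protocol", "equal", "GPRS"),
    ("gps-activity", "is_not_null", None),
]
print(choose_retained(dups, prefs)["event_id"])  # 12
print(choose_retained(dups, [])["event_id"])     # 10
```

With no preferences set, the sketch falls back to the default of retaining the first record by event ID.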

Value range filter

The value range filter allows you to exclude records based on values for any attribute in the dataset. This can be used for a variety of purposes, for example to keep only records with latitude within the range -90 to 90, records with DOP values below a certain threshold, or records that are located outside a bounding box, such as a breeding site.

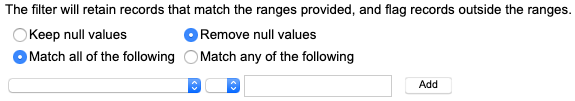

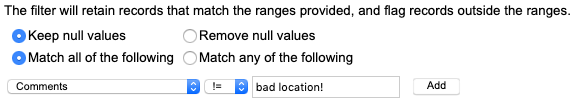

After initiating the filter, the filter options look like this:

-

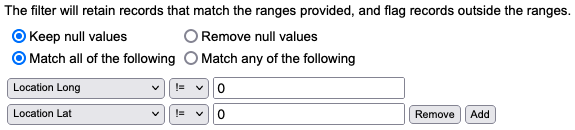

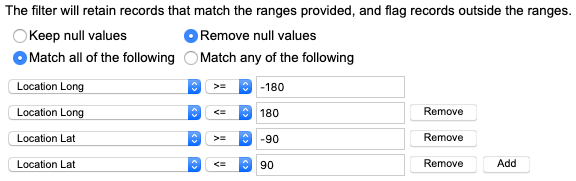

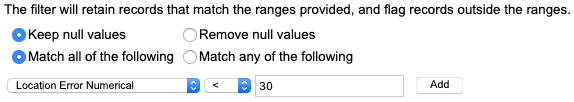

Specify whether to retain or flag (remove) null (empty) values for attributes included in the filter.

-

Choose whether the records to be retained must match all or any of the defined ranges.

-

From the dropdown list on the left, choose an attribute that you want to define a range for.

-

Select how you want to define values of records that should be retained. Records that fall outside defined ranges will be flagged as outliers:

-

For numeric attributes, the options will be

= (equal to)

!= (not equal to)

> (greater than)

< (less than)

>= (greater than or equal to)

<= (less than or equal to) -

For text attributes, options will be

= (equal to)

!= (not equal to) -

In attributes with a list of possible values, it will include that list of values.

-

Entries must use formats and units defined in the Movebank Attribute Dictionary.

-

-

In the field on the right, enter the value to define the range.

The following are some examples of how this filter could be used.

Example 1. Flag locations for which coordinates "0,0" have been provided as null values.

Example 2. Flag locations outside the ranges for coordinates in decimal degrees, as well as records with no latitude or longitude provided.

Example 3. Flag locations where "location error numerical" is more than 30 meters, keeping any records for which there is no value.

Example 4. Flag locations where "comments" say "bad location!"
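The four examples above can be expressed as retention rules in a short sketch. This is only an illustration of the logic, not Movebank's implementation. The function name `is_retained`, the dictionary keys used for attributes, and the treatment of a null value as passing or failing an individual range (depending on the retain/flag setting) are assumptions.

```python
import operator

OPS = {"=": operator.eq, "!=": operator.ne, ">": operator.gt,
       "<": operator.lt, ">=": operator.ge, "<=": operator.le}


def is_retained(record, ranges, match="all", retain_nulls=False):
    """Return True if the record is retained, False if it would be flagged.

    `ranges` is a list of (attribute, operator, value) tuples describing the
    values of records to keep. Assumption: a null value passes a range only
    when `retain_nulls` is True.
    """
    results = []
    for attr, op, value in ranges:
        actual = record.get(attr)
        if actual is None:
            results.append(retain_nulls)
        else:
            results.append(OPS[op](actual, value))
    return all(results) if match == "all" else any(results)


# Example 1: keep records where either coordinate is non-zero (match any).
ex1 = [("location-lat", "!=", 0), ("location-long", "!=", 0)]
# Example 2: keep coordinates inside valid decimal-degree ranges, flag nulls.
ex2 = [("location-lat", ">=", -90), ("location-lat", "<=", 90),
       ("location-long", ">=", -180), ("location-long", "<=", 180)]
# Example 3: keep errors of 30 m or less, retaining records with no value.
ex3 = [("location-error-numerical", "<=", 30)]
# Example 4: keep records whose comment is not "bad location!".
ex4 = [("comments", "!=", "bad location!")]

rec = {"location-lat": 0, "location-long": 0,
       "location-error-numerical": None, "comments": "bad location!"}
print(is_retained(rec, ex1, match="any"))        # False
print(is_retained(rec, ex2))                     # True
print(is_retained(rec, ex3, retain_nulls=True))  # True
print(is_retained(rec, ex4))                     # False
```

Note that each rule describes the records to keep, so Example 1 is written as "latitude is not 0 or longitude is not 0" rather than as a description of the records to flag.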

Speed filter

Many outliers in tracking data can be removed by calculating the speed that would be required to go between consecutive locations, and excluding records that, if correct, would require that the animal had been traveling at an unrealistically high speed. The speed filter flags records that imply unrealistic speeds, with an optional buffer to account for the accuracy of your location estimates. Before running the speed filter, be sure that your deployment periods are correctly set. Accidentally including locations in the track that were collected before or after tags were deployed on animals could lead to unexpected results.

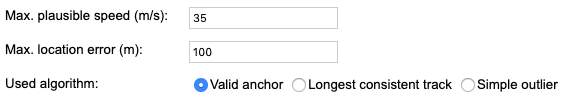

After initiating the filter, the filter options look like this:

- Default values are not based on information about your analysis objectives or species. Modify these as needed based on your expert knowledge.

- Enter the maximum plausible ground speed for your animals, in meters per second. Remember that your species may be able to travel quite quickly sometimes, for example with favourable wind or currents, and you don't want to accidentally remove these records. Also consider your sampling interval—an animal's maximum speed over a short time interval might be much greater than it could maintain over a longer period. The value you use should represent a speed (in m/s) that it could maintain over the temporal interval in your dataset.

- Enter the maximum likely location error for your tag type, in meters. Even a fairly small location error could lead to records that suggest a high speed of movement. Also, you might accept a more or less conservative error estimate depending on the type of analysis you will be doing.

- Choose a filter algorithm. All speed filter algorithms ignore records with no location, undeployed records, and records already marked as outliers (in “manually marked outlier” or “algorithm marked outlier”). A record is considered valid if it meets the filter settings when compared to neighboring records according to these rules:

Simple outlier: This method tests the filter settings for each record against the previous and subsequent records. If both neighboring locations require an implausible speed, the record is marked as an outlier. Avoid this algorithm if your tracks are likely to contain groups of >2 consecutive outliers near each other or outliers as the first or last records in the track.

Valid anchor: This method assumes that the first location in the track is accurate (not an outlier). For all other records, it tests the filter settings against the subsequent record. If movement to the next location (from n to n+1) requires an implausible speed, the subsequent record (n+1) is marked as an outlier, and the current record (n) is tested against the next record (n+2), and so on, until a plausible next location is found. Be sure that you have set your deployment start times correctly and that the first location in each track appears correct (if not, you can manually mark them as outliers before running the filter).

Longest consistent track: This method finds the longest sequence of points in the track that is fully consistent. It will “walk through” each record in the dataset, beginning a new “candidate track” at the first record and whenever a record does not pass the filter settings when compared to the previous point. If the current record fits with more than one candidate track based upon the filter settings, it will be assigned to the longest candidate track. After running through the entire track, it will use the longest candidate track as the correct one and flag records not included in this track as outliers. This method makes it possible to catch outliers at the beginning or end of a track, as well as groups of outliers near each other. Avoid this algorithm if your track may contain an outlier cluster with more records than the entire valid track (for example, in the case of short tracks with lots of outliers or without correct deployment periods).
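The differences between the three algorithms are easier to see in a simplified sketch. This is an interpretation of the descriptions above, not Movebank's code, and it rests on several assumptions: the location error buffer is subtracted from the great-circle distance before the speed check, the input track already excludes records the filters ignore, and a new candidate track is started whenever a record fits no existing candidate. All function names and the record layout (`t` in seconds, `lat`, `lon`) are hypothetical.

```python
import math


def distance_m(a, b):
    """Great-circle (haversine) distance in meters between two records."""
    radius = 6371000.0  # mean Earth radius in meters
    lat1, lat2 = math.radians(a["lat"]), math.radians(b["lat"])
    dlat, dlon = lat2 - lat1, math.radians(b["lon"] - a["lon"])
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * radius * math.asin(math.sqrt(h))


def plausible(a, b, max_speed, max_error):
    """True if the step between a and b needs no more than max_speed (m/s).

    Assumption: the error buffer (meters) is subtracted from the distance.
    """
    elapsed = abs(b["t"] - a["t"])
    distance = max(0.0, distance_m(a, b) - max_error)
    return distance <= max_speed * elapsed


def simple_outlier(track, ok):
    """Flag records whose previous AND subsequent steps are both implausible."""
    return {i for i in range(1, len(track) - 1)
            if not ok(track[i - 1], track[i]) and not ok(track[i], track[i + 1])}


def valid_anchor(track, ok):
    """Trust the first record; flag records unreachable from the last valid one."""
    flagged, anchor = set(), 0
    for i in range(1, len(track)):
        if ok(track[anchor], track[i]):
            anchor = i
        else:
            flagged.add(i)
    return flagged


def longest_consistent_track(track, ok):
    """Flag every record that is not part of the longest candidate track."""
    candidates = []  # each candidate track is a list of record indices
    for i, rec in enumerate(track):
        fitting = [c for c in candidates if ok(track[c[-1]], rec)]
        if fitting:
            max(fitting, key=len).append(i)
        else:
            candidates.append([i])
    best = max(candidates, key=len) if candidates else []
    return set(range(len(track))) - set(best)


def point(t, lon):
    return {"t": t, "lat": 0.0, "lon": lon}


def ok(a, b):
    return plausible(a, b, max_speed=20.0, max_error=30.0)


# One outlier in the middle of the track, and one as the first record.
track = [point(0, 0.0), point(60, 0.001), point(120, 1.0), point(180, 0.002), point(240, 0.003)]
bad_start = [point(0, 1.0), point(60, 0.0), point(120, 0.001), point(180, 0.002)]
for algorithm in (simple_outlier, valid_anchor, longest_consistent_track):
    print(algorithm.__name__, sorted(algorithm(track, ok)), sorted(algorithm(bad_start, ok)))
# simple_outlier [2] []
# valid_anchor [2] [1, 2, 3]
# longest_consistent_track [2] [0]
```

All three sketches catch the isolated mid-track outlier. When the outlier is the first record, the simple outlier sketch misses it, the valid anchor sketch wrongly flags every later record, and only the longest consistent track sketch flags the first record alone.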

Check your results after running the filter. Here are some examples of things to look out for:

- The filter flags locations directly before or after true outliers, but does not flag the real outlier. If this happens, the outliers might still be visible after running the filter. You can improve performance by manually flagging obvious outliers prior to running a speed filter.

- Valid locations during high-speed migratory flights are flagged, but increasing the maximum speed to retain them will prevent outliers during stationary periods from being flagged. You can override results for these records by assigning the value "true" to the attribute manually marked valid.
